Some things in life we ignore. For example, the “check engine” light. That’s just there as a suggestion, right?

But when you’re performance tuning, you can’t afford to ignore the warning signs. I can’t count the number of times that I’ve found the issue with a query by looking at the warnings.

Enough talk, let’s look at an example

Let’s create a table of Students, with just one column.

CREATE TABLE [dbo].[Students]([StudentName] [varchar](100) NULL)

GO

CREATE CLUSTERED INDEX ix_Students_Name

ON Students(StudentName)

GO

I need some dummy data to really increase the numbers here, so I’ll load in the names from StackOverflow2010.

INSERT INTO Students(StudentName)

SELECT DisplayName

FROM StackOverflow2010.dbo.Users

Great! About 300,000 rows were loaded into my new table. Now I need to write a query. How about this one?

SELECT StudentName

FROM Students

WHERE StudentName = N'Arthur'

35 rows returned! Disclaimer: none of those users are actually me. This post is about the execution plan, so let’s retrieve the execution plan along with the I/O statistics by running SET STATISTICS IO ON.
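For reference, the whole batch could look something like the sketch below. It assumes you are running it in SSMS with "Include Actual Execution Plan" (Ctrl+M) turned on; any other way of capturing the actual plan works just as well.

```sql
-- Report logical/physical reads per table in the Messages tab.
SET STATISTICS IO ON;

-- The N prefix makes the literal nvarchar, while the column is varchar.
SELECT StudentName
FROM dbo.Students
WHERE StudentName = N'Arthur';

-- Turn the setting back off when you are done measuring.
SET STATISTICS IO OFF;
```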

Table 'Students'. Scan count 1, logical reads 910, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

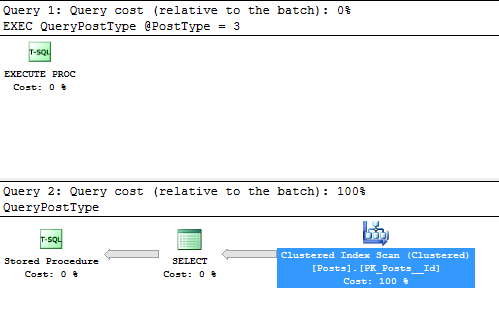

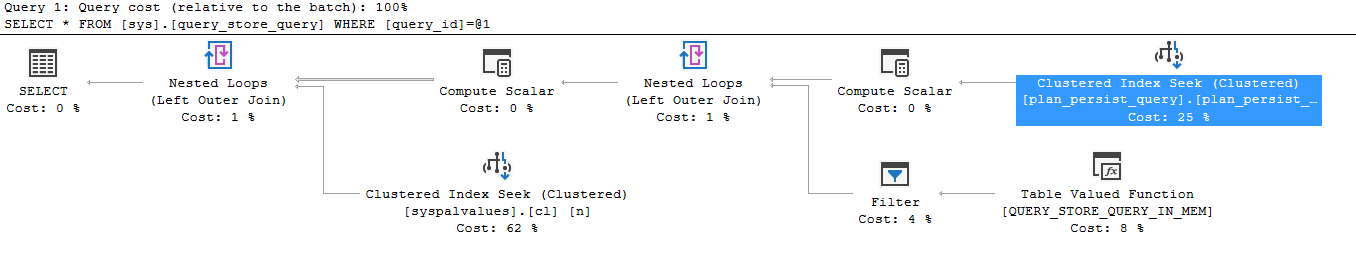

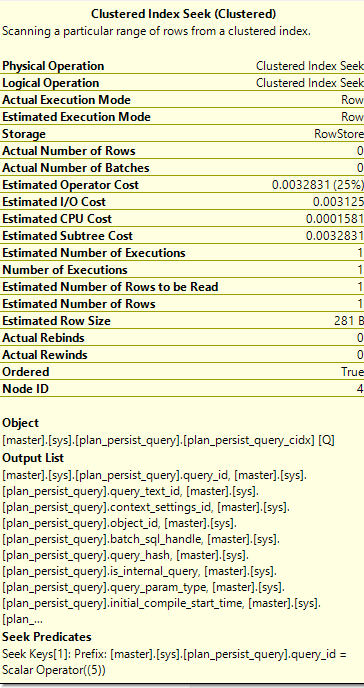

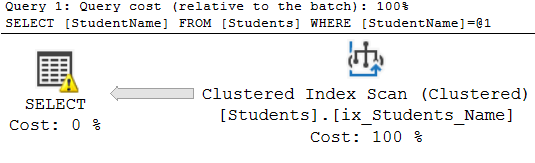

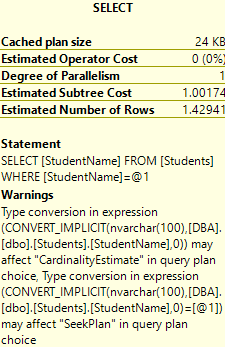

Ah, there’s the warning sign on the left there. Here’s the warning it’s giving us.

Type conversion in expression? What does that mean?

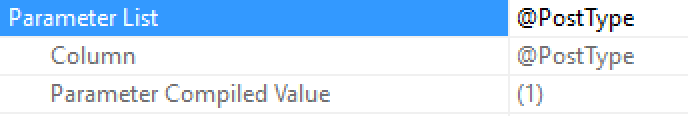

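Well, because I put the N prefix in front of my string literal 'Arthur', I was comparing against an nvarchar value, while the column’s data type is varchar. nvarchar has higher data type precedence than varchar, so SQL Server converted the StudentName values to nvarchar instead of converting my literal to varchar. With the conversion wrapped around the column, this plan couldn’t seek on the index and had to convert and compare every row, which is where the 910 logical reads came from.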
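If you want to see the difference between the two literals for yourself, here is a quick sketch using SQL_VARIANT_PROPERTY to ask SQL Server what base type it assigns to each one. The column aliases are just names I made up for the demo.

```sql
-- Compare the base data type of a literal with and without the N prefix.
SELECT
    SQL_VARIANT_PROPERTY(N'Arthur', 'BaseType') AS WithNPrefix,    -- nvarchar
    SQL_VARIANT_PROPERTY('Arthur',  'BaseType') AS WithoutNPrefix; -- varchar
```

When those two types meet in a comparison, the varchar side is the one that gets converted, and in this demo that side is the indexed column.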

That’s called an Implicit Conversion, and this issue only gets worse as your data grows in size. Often, this issue doesn’t appear overnight. It can exist for weeks or months, just making each query run a little slower. Then in a few years, the query is slow and users start to complain. Since nothing has changed, it’s hard to track down.
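One way to go hunting for these before users complain is to search the plan cache for plans that carry this warning. The query below is a rough sketch, not a polished tool: it assumes SQL Server 2012 or later (where the showplan XML includes the PlanAffectingConvert element), it needs VIEW SERVER STATE permission, and shredding plan XML can be expensive on a busy server, so try it somewhere safe first.

```sql
-- Find cached plans that contain a "type conversion may affect plan" warning.
WITH XMLNAMESPACES (DEFAULT 'http://schemas.microsoft.com/sqlserver/2004/07/showplan')
SELECT TOP (20)
    cp.usecounts,   -- how often the cached plan has been reused
    st.text,        -- the statement text behind the plan
    qp.query_plan   -- click through in SSMS to see the warning
FROM sys.dm_exec_cached_plans AS cp
CROSS APPLY sys.dm_exec_query_plan(cp.plan_handle) AS qp
CROSS APPLY sys.dm_exec_sql_text(cp.plan_handle) AS st
WHERE qp.query_plan.exist('//PlanAffectingConvert') = 1
ORDER BY cp.usecounts DESC;
```

Sorting by usecounts is just one choice; it surfaces the plans that run most often, which is usually where a small per-query cost adds up.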

Is it worth fixing?

I say absolutely yes. Let’s rerun the example with a plain varchar literal to see how much it improves, and then I’ll talk more about the fix in the real world.

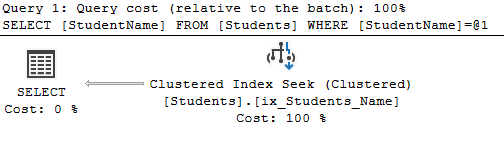

SELECT StudentName

FROM Students

WHERE StudentName = 'Arthur'

Table 'Students'. Scan count 1, logical reads 3, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

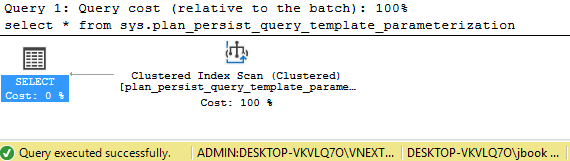

Ah, that’s better. Not only has the warning disappeared, but the index scan in the execution plan became an index seek, and logical reads dropped from 910 to 3.

What happens in the real world?

The real world is never as easy or simple as a demo environment like this. If your company is international, I’d argue that the correct choice would be to change the data type of the column to nvarchar. That’s a lot harder than the easy fix in my demo.
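To give a feel for why it’s harder, here is a sketch of what changing the column could look like on my demo table. Because StudentName is the key of ix_Students_Name, SQL Server won’t let me change its data type while the index exists, so the index has to be dropped and recreated. Treat this as an illustration only: on a large production table, the rebuild takes time and locks, nvarchar roughly doubles the storage for the column, and every procedure and application parameter that touches it needs a review.

```sql
-- 1. Drop the index that depends on the column (the table becomes a heap for now).
DROP INDEX ix_Students_Name ON dbo.Students;
GO

-- 2. Change the column from varchar(100) to nvarchar(100).
ALTER TABLE dbo.Students
ALTER COLUMN StudentName nvarchar(100) NULL;
GO

-- 3. Recreate the clustered index on the converted column.
CREATE CLUSTERED INDEX ix_Students_Name
ON dbo.Students(StudentName);
GO
```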

At this point, when you have a slow query and there’s an implicit conversion that you believe is influencing the execution plan, it’s time to start asking questions. Start by finding your favorite application developer and ask them how they use the column.

More warning sign posts to come

I want to leave an honorable mention for the other warning signs that show up in execution plans, specifically the excessive memory grant warning. I’ll talk about that in a future post, so stay tuned!